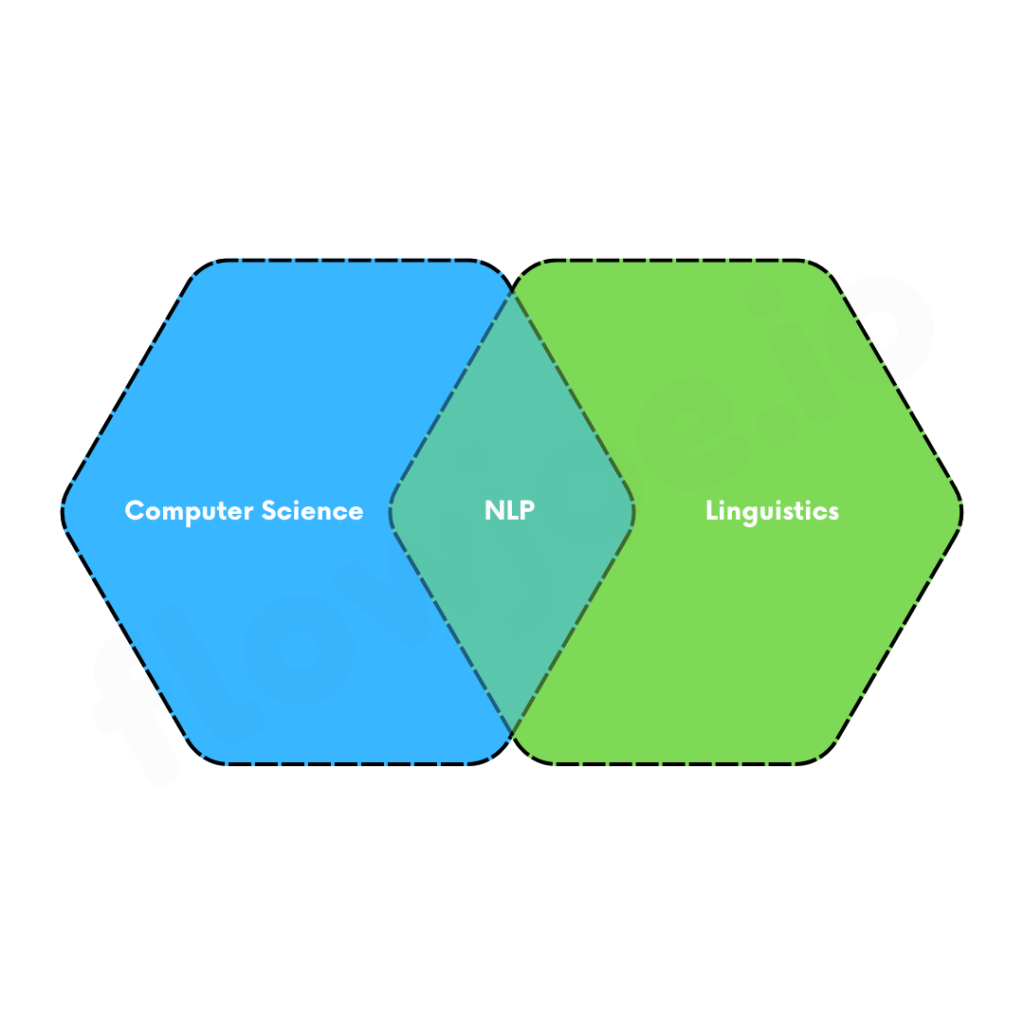

Natural Language Processing (NLP) is a field of artificial intelligence that bridges the gap between human language and computers. It enables machines to analyze, interpret, and even generate human language. From spam detection to real-time language translation, NLP applications are transforming how we interact with technology.

What is NLP?

At its core, NLP focuses on teaching machines to understand text and speech. Human language is inherently complex, filled with nuance, context, and structure. NLP works to make sense of this complexity by converting language into a form computers can process while maintaining meaning and intent.

Applications like virtual assistants, chatbots (such as those built with Microsoft’s Copilot Studio), and sentiment analysis tools rely on NLP to process and understand natural language input.

Applications of NLP

Text Classification: Text classification assigns predefined labels to text.

- Spam Detection: Automatically classifies emails as spam or not spam.

- News Categorization: Groups articles into categories like sports, business, or politics.

Example: A system that processes hundreds of articles daily to sort them by topic for easy navigation.

Sentiment Analysis: Sentiment analysis identifies the emotional tone of text, such as positive, negative, or neutral.

Example: Companies use sentiment analysis on social media posts to gauge consumer opinions about their products or brand.
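To make the three labels concrete, here is a minimal sketch of a lexicon-based scorer. It is only an illustration: the word lists `POSITIVE` and `NEGATIVE` and the function `sentiment` are hypothetical names invented for this example, and real sentiment systems generally rely on trained models rather than fixed word lists.

```python
import re

# Hypothetical, tiny word lists used only for illustration.
POSITIVE = {"love", "great", "excellent", "happy"}
NEGATIVE = {"hate", "terrible", "broken", "slow"}


def sentiment(text):
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for post in [
    "I love this phone, great battery",
    "The app is slow and broken",
    "It arrived on Tuesday",
]:
    print(post, "->", sentiment(post))
```

A word-counting approach like this misses negation and sarcasm ("not great" still counts as positive), which is one reason learned models are preferred in practice.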

Search and Question Answering: NLP enhances search systems by understanding context, not just matching keywords.

Example: A student asks, “How do I calculate the mean of a list?” A sophisticated NLP model understands that “mean” and “average” refer to the same concept here and provides the correct answer, even if the keywords differ.

Machine Translation: NLP powers applications like Google Translate, enabling seamless translations between languages.

Example: Translating English sentences into Spanish in real time for communication or learning.

Text Generation: NLP can generate meaningful text, such as auto-replies for emails or summaries of long documents.

Example: Creating automated responses to common customer service queries.

How NLP Works

To process language, machines must first convert text into numerical representations. This step allows models to analyze and understand text effectively. Several techniques are commonly used to achieve this.

One of the traditional methods is the Bag of Words (BoW) approach. It represents a text by counting how often each word from a predefined vocabulary occurs in it. While this method is straightforward, it has significant limitations. BoW ignores the order of words and the relationships between them, which can lead to a loss of contextual information.
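The Bag of Words idea can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the function name `bag_of_words`, the simple regex tokenizer, and the sample vocabulary are assumptions made for this example.

```python
import re
from collections import Counter


def bag_of_words(text, vocabulary):
    """Return one count per vocabulary word, in vocabulary order."""
    # Simplistic tokenizer (an assumption): lowercase runs of letters.
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


vocabulary = ["dog", "bites", "man", "cat"]
vec_a = bag_of_words("Dog bites man", vocabulary)
vec_b = bag_of_words("Man bites dog", vocabulary)

print(vec_a)           # [1, 1, 1, 0]
print(vec_b)           # [1, 1, 1, 0]
print(vec_a == vec_b)  # True
```

The two sentences mean very different things, yet they produce identical vectors, which is exactly the word-order limitation described above.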

A more advanced approach is the use of word embeddings, which represent words as dense vectors that capture their meaning. For instance, words like “learn” and “study” would have similar embeddings because they tend to appear in similar contexts and share a related meaning. Word embeddings provide a more nuanced representation of language than BoW. Development is often accelerated by using pretrained embeddings such as Word2Vec or GloVe vectors, which are trained on massive text datasets.
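One common way to compare embeddings is cosine similarity. The sketch below uses hand-made three-dimensional vectors purely for illustration; they are not taken from Word2Vec, GloVe, or any trained model, and real embeddings typically have hundreds of dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Toy vectors invented for this example (an assumption, not real embeddings).
embeddings = {
    "learn": [0.9, 0.8, 0.1],
    "study": [0.8, 0.9, 0.2],
    "banana": [0.1, 0.2, 0.9],
}

sim_related = cosine_similarity(embeddings["learn"], embeddings["study"])
sim_unrelated = cosine_similarity(embeddings["learn"], embeddings["banana"])

print(f"learn vs study:  {sim_related:.3f}")
print(f"learn vs banana: {sim_unrelated:.3f}")
```

With these toy values, "learn" and "study" score much closer to 1 than "learn" and "banana", mirroring how related words end up near each other in a trained embedding space.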

The most modern and powerful method involves transformers and attention mechanisms. Transformers build on word embeddings by adding positional encodings and attention mechanisms, which allow them to capture the relationships between words, even those far apart in a sentence. The attention mechanism is particularly important, as it computes how strongly each word in a sentence relates to every other word.

For example, in the sentence, “The cat didn’t eat because she was full,” the attention mechanism can give “cat” a high weight when processing “she,” enabling the model to resolve what the pronoun refers to and grasp context and meaning more effectively. These advancements have made transformers the backbone of many state-of-the-art NLP systems.
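The core computation, scaled dot-product attention, can be sketched with the standard library alone. Everything numeric here is an assumption for illustration: the query and key vectors are hand-picked so that “she” lines up with “cat”, whereas a real transformer learns these vectors from data and works with many more dimensions and words. For simplicity, the keys are also reused as the values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Output is the weighted average of the value vectors.
    output = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output


# Hand-picked toy vectors (hypothetical, not learned).
tokens = ["cat", "eat", "she", "full"]
keys = [[2.0, 0.0], [0.0, 2.0], [0.5, 0.5], [0.0, 1.0]]
query_she = [2.0, 0.0]

weights, output = attention(query_she, keys, keys)
for token, weight in zip(tokens, weights):
    print(f"{token:>5}: {weight:.2f}")
```

With these toy values, most of the weight for “she” lands on “cat”, which is the behavior the example sentence describes.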

Deep Learning in NLP

Deep learning (DL) has become the foundation for advanced NLP applications. Neural networks, especially transformers like BERT and GPT, have set new standards for language understanding and generation. So, why is DL essential for NLP?

- Contextual Understanding: Deep learning models analyze word relationships and understand context better than traditional methods.

- Scalability: Models pretrained on massive datasets can be fine-tuned for specific tasks like sentiment analysis or machine translation, instead of being trained from scratch each time.

- Accuracy: Deep learning models typically outperform older techniques, especially on complex tasks like text summarization or question answering.

Summary

Natural Language Processing (NLP) bridges the gap between human language and computers, enabling machines to analyze, interpret, and generate text with remarkable accuracy. From spam detection to sentiment analysis, NLP applications are reshaping how we interact with technology.

The evolution of NLP techniques, from traditional Bag of Words to modern transformers and attention mechanisms, has unlocked powerful capabilities for understanding context, relationships, and meaning in language. Combined with deep learning, NLP models now deliver strong performance in tasks like translation, question answering, and text generation.

Further Reading

Deep Learning